The specific tenets of quantum mechanics are undoubtedly mind-boggling. Its apparent tension with Einstein’s Theory of Special Relativity, combined with its probabilistic nature, renders it almost inconceivable. Perhaps more inconceivable, however, is the idea that quantum mechanics dictates a uniquely human relationship with nature, one that places the long-avoided, subjective notion of consciousness in the objective scientific domain.

Humans as Observers

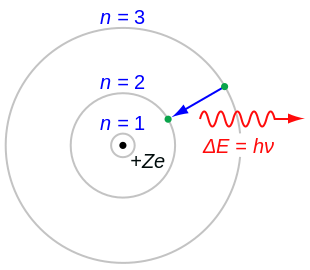

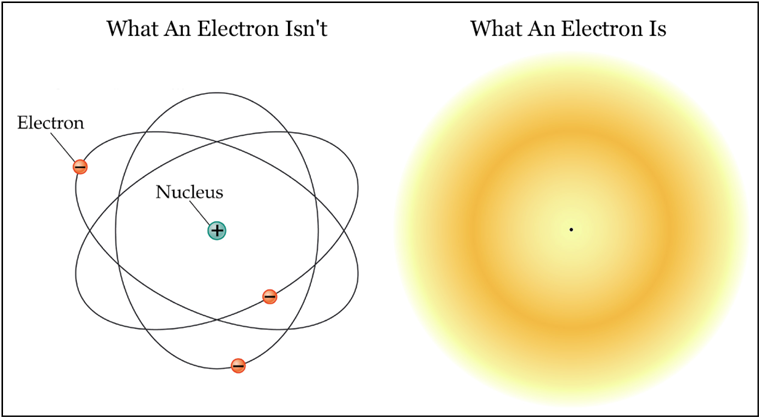

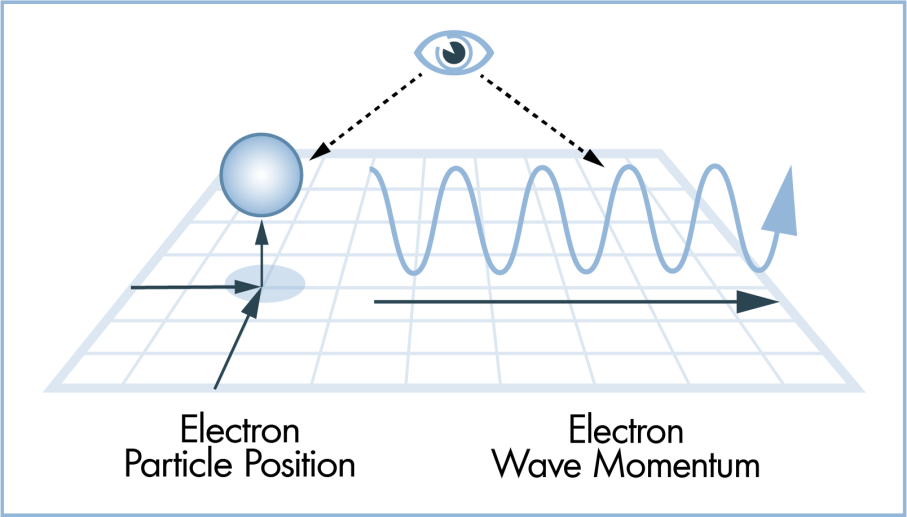

Recall that any unobserved object exists in a superposition, with some probability of being found at each point in space and time, until an observation of the object collapses that superposition (loosely referred to here as decoherence). Larger objects are simply far less likely, though not strictly forbidden, to tunnel spontaneously from one place to another. Observations typically take the form of measuring devices capturing the state of an atom or the location of an electron, but such tools are the product of human ingenuity and have their “findings” confirmed only upon human intervention.

Why does nature “choose” to revert to a distinct position or configuration exclusively when humans perform observations or measurements? Why does it not do so in the ubiquitous presence of inanimate atoms and subatomic particles? Such a phenomenon may not be a “choice” of nature but rather the result of a unique relationship between the laws of physics and human consciousness. After all, how can we definitively draw conclusions regarding the laws of nature if we are unaware of them? They may in fact exist, but for any individual unaware of them, their absence from that person’s mind makes them effectively nonexistent.

Such philosophical ramifications arguably find some footing in quantum mechanics. The profundity of consciousness stems from the fact that each individual lives in his or her own reality. It is common to suggest to someone unfamiliar with the subject, often as a joke, that “all of this may be a dream” (i.e., that the people, places, objects, ideas, and perhaps even the entire life of that individual may be artificial constructions of his or her mind). Indeed, when you read this sentence, how do you know that anyone else reads the same words, or even has any conception of a “word”? The mechanics of human vision notwithstanding, your superposition collapse may very well differ from that of the person next to you…

But What About Animals?

Even one who accepts this role for human consciousness in nature may rightly ask whether intelligent and quasi-intelligent animal life exhibits the same relationship to quantum mechanics. That is, can dogs induce decoherence?

Although very few quantum physicists (and, by extension, philosophers of quantum mechanics) have actually investigated the question, most will succinctly answer “yes.” Regardless of how the quality of their senses (sight, smell, hearing, taste, etc.) compares to ours, conscious animals, even if incapable of critical thinking, are observers and thus should be enablers of an object’s reversion into a distinct configuration.

However, just as one human’s superposition collapse and sense of reality are unique, so too is an animal’s sense of reality a mere projection of its own mind. When an animal witnesses an event away from a human, the event occurs as observed only in the mind of the animal. A separate observation from a separate entity (e.g., the external human) yields a separate reality for that entity. Thus, human and animal consciousness, as the means of bringing about superposition collapse, may be one and the same: a superposition collapse occurs upon an external observation by a conscious entity, with the nature of the decoherence unique to the individual (i.e., not to the species).

Entangled Minds

A uniquely human application of quantum mechanics, one that is often derided as pseudoscience yet has gained traction in various studies, is the idea of entanglement between human minds. Recall that in entanglement, observing one particle’s state instantly reveals the state of its entangled counterpart, even if the two are separated by millions of light-years. The idea of human mind entanglement is most notably examined in Dean Radin’s book Entangled Minds.


Radin puts forth empirical evidence based on several observational studies and surveys that suggests that certain people exhibit behaviors such as:

- Clairvoyance (sensing ongoing events without actually observing them)

- Precognition (foresight; a sense of the future)

- Post-diction (retroactive clairvoyance)

- Telepathy

- Telekinesis

Radin qualifies that such behaviors, if verified, primarily occur among loved ones and relatives. He gives an example of a mother suddenly sensing that “something had happened” to her son as he was killed in combat in the Middle East. He also cites similar instances of one having a premonition that something will happen to a friend or family member if he or she takes a particular course of action.

Although the research is still fledgling and contingent upon parapsychology, Radin suggests that all the people with whom one closely associates are connected to that person, subtly or notably, more deeply than through DNA or mutual affection. Radin, among others, asserts that just as humans may be agents of quantum superposition collapse, so too may they be entangled through their minds.

Indeed, on this view, quantum mechanics has evolved from a theory of physics into something approaching a new branch of neurology. Whatever the future holds for the science, it is certainly uncertain, and it can only be realized by a superposition collapse epitomized in further human (and animal) research and investigation. Will we ever fully come to terms with quantum mechanics? Perhaps, in the ironic words of quantum mechanics skeptic Albert Einstein, it will forever remain elusive and “spooky.”