I might have slight OCD when it comes to dealing with adapters. I like to remove them all 🙂 I will now provide detailed post about how to remove adapters in PE and MP reads based on my own experience. (Note that default set of adaptors provided with Trimmomatic might work just as fine for you.)

Summary: based on success of sequencing run, removing adaptors and trimming of PE reads might or might not be important. However, proper dealing with MP will have major impact on your downstream analysis and you just SHOULD pay proper attention to it.

Sanity check

Usually, forward and reverse reads come in two fastq files named as R1 and R2 (sometimes they are in one file, but I just deinterleave them since I prefer separate files). First I make sure that both of these files have same number of sequences (and hence were copied correctly). This can be done with FastQC during the same time you will check for quality of sequences and duplication levels. Next, I check that sequence length equals quality score length for each entry and validate files with fastQValidator. I also note md5sum – unique hashcode of the file (even deletion of one character will alter md5sum). This might be a bit overkill, but better find out sooner than later. I use script that looks something like this:

sanity_check.sh

#!/bin/bash

function check_data {

R1=$1;

R2=$(echo $R1 | sed ‘s/R1.fastq/R2.fastq/g’)

echo “Check if length of sequence equals quality score length”

bioawk -c fastx ‘{if (length($seq)!=length($qual)) print “Offending: ” NR}’ $R1

bioawk -c fastx ‘{if (length($seq)!=length($qual)) print “Offending: ” NR}’ $R2

echo “run FastQ Validator”

fastQValidator –file $R1

fastQValidator –file $R2

echo “Finished validation.”

echo “Check md5 sums:”;

echo `md5sum $R1`

echo `md5sum $R2`

echo “——–”

}

#validate Fastq file

echo “File: ” $1

check_data $1

Adapter detection

Now you want to know which adaptors are in your data. The easiest is probably to ask lab person that generated the data. What you will usually observe with Illumina is indexed adaptor at the beginning of your F reads (plus sometimes some fake polyA stretches that drive me a bit crazy) and universal adaptor at the end of your R reads. I usually take a look with egrep how those sequences look like and how abundant they are:

>PrefixAdapter7/1

GATCGGAAGAGCACACGTCTGAACTCCAGTCACCAGATCATCTCGTATGCCGTCTTCTGCTTG

>PrefixUniversal/2

AGATCGGAAGAGCGTCGTGTAGGGAAAGAGTGTAGATCTCGGTGGTCGCCGTATCATT

So if you used indexed adaptor 7 from Illumina you would type:

egrep –color “GATCGGAAGAGCACACGTCTGAACTCCAGTCACCAGATCATCTCGTATGCCGTCTTCTGCTTG” R1.fasta

egrep –color “AGATCGGAAGAGCGTCGTGTAGGGAAAGAGTGTAGATCTCGGTGGTCGCCGTATCATT” R2.fastq

Some adaptors might be present just partially, so this will be rather a lower boundary.

Trimming PAIRED-END reads – THEORY

Now you have an idea how many adaptors you have. My software of choice is trimmomatic, because it

- trims adaptors

- removes low quality sequences if you wish to do so

- keeps order of the reads – I usually choose to work only with cases where both F and R reads survived trimming

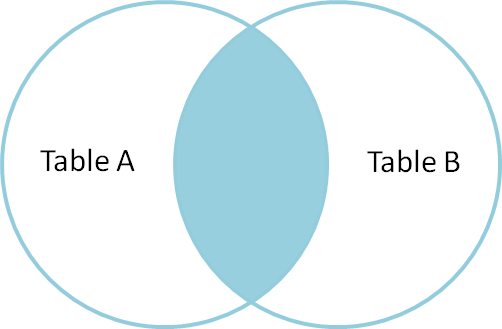

I know that a lot of people run Trimmomatic without checking which types of adaptors they actually have in the data and it often works. I would recommend reading pages 5 and 6 in Trimmomatic manual – turns out you can run your trimming in both simple or palindrome mode.

Here is the summary of that:

“With ‘simple’ trimming, each adapter sequence is tested against the reads, and if a sufficiently accurate match is detected, the read is clipped appropriately.

‘Palindrome’ trimming is specifically designed for the case of ‘reading through’ a short fragment into the adapter sequence on the other end. In this approach, the appropriate adapter sequences are ‘in silico ligated’ onto the start of the reads, and the combined adapter+read sequences, forward and reverse are aligned. If they align in a manner which indicates ‘read-through’, the forward read is clipped and the reverse read dropped (since it contains no new data).

Naming of the sequences indicates how they should be used. For ‘Palindrome’ clipping, the sequence names should both start with ‘Prefix’, and end in ‘/1’ for the forward adapter and ‘/2’ for the reverse adapter. All other sequences are checked using ‘simple’ mode. Sequences with names ending in ‘/1’ or ‘/2’ will be checked only against the forward or reverse read. Sequences not ending in ‘/1’ or ‘/2’ will be checked against both the forward and reverse read. If you want to check for the reverse-complement of a specific sequence, you need to specifically include the reverse-complemented form of the sequence as well, with another name.” http://www.usadellab.org/cms/?page=trimmomatic

For paired end reads I use palindrome mode:

>PrefixAdapter7/1

GATCGGAAGAGCACACGTCTGAACTCCAGTCACCAGATCATCTCGTATGCCGTCTTCTGCTTG

>PrefixUniversal/2

AGATCGGAAGAGCGTCGTGTAGGGAAAGAGTGTAGATCTCGGTGGTCGCCGTATCATT

For simple mode I would choose following adaptor sequences:

>Adapter7/1

GATCGGAAGAGCACACGTCTGAACTCCAGTCACCAGATCATCTCGTATGCCGTCTTCTGCTTG

>Universal/2

AGATCGGAAGAGCGTCGTGTAGGGAAAGAGTGTAGATCTCGGTGGTCGCCGTATCATT

Sometimes you don’t know which adaptor sequences you have, then I would check if any of these are present: MEDIUM_contaminant_list (please note that this list is very incomplete and might not cover new adaptor sequences from Illumina) and check them with following script:

find_cMEDIUM.sh

#!/bin/bash –

file=$1;

echo “FILE PROCESSING: ” $file;

rm -f ${file}_MEDIUMadapt_contamination_log.txt; #remove previous logs

while read line; do name=$(echo $line | cut -f1 -d’ ‘); seq=$(echo $line | cut -f2 -d’ ‘); echo -ne $(date) $name “looking for $seq\t” >>${file}_MEDIUMadapt_contamination_log.txt; fgrep -c $seq ${file} >>${file}_MEDIUMadapt_contamination_log.txt; done <MEDIUM_contaminant_list.txt

There are for sure better ways to do this, but since I am running this on every dataset just once, I never bothered to optimize 🙂

Trimming PAIRED-END reads – HANDS ON

I use script that looks like this:

#!/bin/bash

function trim_data {

R1=$1;

R2=$(echo $R1 | sed ‘s/R1/R2/g’)

adapter_file=$2

java -jar /usr/bin/biomonika/Trimmomatic-0.32/trimmomatic-0.32.jar PE -threads 60 -phred33 -trimlog ${R1}_trimlog $R1 $R2 ${R1}_trimmed.fastq ${R1}_Fsingle.fastq ${R2}_trimmed.fastq ${R1}_Rsingle.fastq ILLUMINACLIP:adaptors_sequences/${adapter_file}:2:30:10 LEADING:3 TRAILING:3 SLIDINGWINDOW:4:15 MINLEN:30;

rm ${R1}_Fsingle.fastq ${R1}_Rsingle.fastq;

}

#TRIM PAIRED END READS

trim_data PE_R1.fastq customPalindrome_7_UNI.fa

Finally, I perform similar sanity check as at the beginning to make sure that these files are ready for downstream analysis. In general, I would recommend to trim rather less than more – I have seen people trimming 15 bp from the beginning and 5 bp from the end of 100 bp reads because of small nucleotide bias in FastQC report and I don’t think it’s worth it – you will get less uniquely mapped reads since extremely short reads are prone to mapping to multiple positions. However, really bad reads should be discarded, as well as low quality bases at the read ends, but that’s different from trimming everything without looking at the actual quality scores.

Trimming MATE-PAIR reads

Here things get tricky since mate-pair reads will not only contain Illumina adaptors we are all familiar with but also biotinylated adapters. Here is the figure that explains how this works:

Data Processing of Nextera® Mate Pair Reads on Illumina Sequencing Platforms

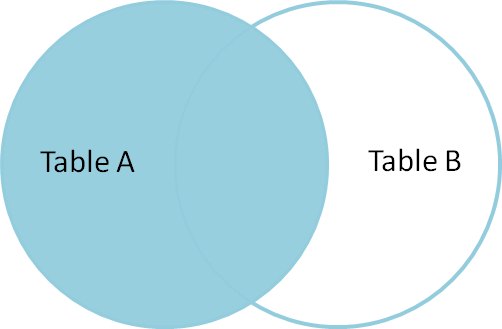

So, DNA gets circularized thanks to which fragments originally distant few kb come close together (biotinylated adapters are in green, whereas traditional Illumina adaptors are pink and gray). It all might sound too good to be true and indeed things can get a bit messy – what if your enrichment wasn’t 100 % and for some reason you ended up sequencing fragments without biotinylated adapters? Then those fragments would be only 250 bp or so apart and not few kb, right? Poor scaffolders dealing with these “fake MP” then 🙂

Here is the figure summarizing the cases that can happen:

Data Processing of Nextera® Mate Pair Reads on Illumina Sequencing Platforms

Please take careful look at the resulting orientation of these reads – PE reads are natively in FR orientation and MP reads in RF orientation (case a). This means that we are going to end up with some “paired-end contamination” in our MP libraries. In our lab, we usually experience less than ~1/3 of such contamination, which we consider OK.

In unknown reads, orientation couldn’t be determined by the position of biotinylated adaptors, but in our experience these are all MP reads (evaluated by mapping to reference and plotting insert size distribution).

I recommend using software called NxTrim to split your reads into these categories – I then recommend you continue with MP and UKNOWN subsets for scaffolding/assembly.

Biotinylated adaptors are now gone so you can go ahead and remove Illumina adaptors. Please notice that NxTrim will reverse complements reads for you so they are now going to be in FR orientation just as paired-end reads usually are. This means that you will find indexed and universal adaptors at rather unusual places.

forward reads, RC means reverse complement, numbers represent how many counts each adaptor appeared:

|

PrefixAdapter7/1 |

PrefixUniversal/2 |

RC PrefixAdapter7/1 |

RC PrefixUniversal/2 |

| R1_MP.fastq |

0 |

0 |

328669 |

0 |

|

x |

x |

end |

x |

| R1_PE.fastq |

1734 |

0 |

1 |

0 |

|

beginning |

x |

x |

x |

| R1_UNKNOWN.fastq |

0 |

0 |

2697712 |

0 |

|

x |

x |

end |

x |

reverse reads, RC means reverse complement, numbers represent how many counts each adaptor appeared:

|

PrefixAdapter7/1 |

PrefixUniversal/2 |

RC PrefixAdapter7/1 |

RC PrefixUniversal/2 |

| R2_MP.fastq |

0 |

0 |

0 |

135757 |

|

x |

x |

x |

end |

| R2_PE.fastq |

0 |

798 |

10 |

0 |

|

x |

beginning |

x |

x |

| R2_UNKNOWN.fastq |

1 |

0 |

0 |

0 |

|

x |

x |

x |

x |

It makes sense – if adaptor was at the beginning of reads before reverse complementing is now going to end up at the end of the reads.

Final code for removing adaptors for reads after NxTrim will then look like this:

#!/bin/bash

function trim_data {

R1=$1;

R2=$(echo $R1 | sed ‘s/R1.fastq/R2.fastq/g’)

adapter_file=$2

java -jar /usr/bin/biomonika/Trimmomatic-0.32/trimmomatic-0.32.jar PE -threads 60 -phred33 -trimlog ${R1}_trimlog $R1 $R2 ${R1}_trimmed.fastq ${R1}_Fsingle.fastq ${R2}_trimmed.fastq ${R1}_Rsingle.fastq ILLUMINACLIP:adaptors_sequences/${adapter_file}:2:30:10 LEADING:3 TRAILING:3 SLIDINGWINDOW:4:15 MINLEN:30;

rm ${R1}_Fsingle.fastq ${R1}_Rsingle.fastq;

}

#trim data

#TRIM MATE PAIR READS

trim_data MP_R1_mp.fastq customSimple_RC-7_RC-UNI.fa

trim_data MP_R1_pe.fastq customSimple_7_UNI.fa

trim_data MP_R1_unknown.fastq customSimple_RC-7_RC-UNI.fa

with following adaptor sequences:

customSimple_RC-7_RC-UNI.fa

>Adapter7RC/1

CAAGCAGAAGACGGCATACGAGATGATCTGGTGACTGGAGTTCAGACGTGTGCTCTTCCGATC

>UniversalRC/2

AATGATACGGCGACCACCGAGATCTACACTCTTTCCCTACACGACGCTCTTCCGATCT

customSimple_7_UNI.fa

>Adapter7/1

GATCGGAAGAGCACACGTCTGAACTCCAGTCACCAGATCATCTCGTATGCCGTCTTCTGCTTG

>Universal/2

AGATCGGAAGAGCGTCGTGTAGGGAAAGAGTGTAGATCTCGGTGGTCGCCGTATCATT

Again, if your adaptor counts are really low, you might decide to just trim for quality since removing 1,000 reads with forgotten adaptors really won’t make a difference 🙂

Additional notes

Sometimes it is believed that adapters just magically disappear because mappers and assemblers are smart (well, some of them are, e.g. some mappers will soft clip these adapter sequences automatically for you, but how can you then tell if soft-clipped sequence is adaptor or something biologically meaningful?).