Why I am Responding Here

As the head of the PSETI Center, I need to address a controversy and correct some factual errors circulating about the Codes of Conduct at various PSETI-related activities. One of these codes led to the rejection of an abstract by an early career researcher (ECR), Dr. Beatriz Villarroel, from an online conference organized by and for ECRs: the Assembly of the Order of the Octopus. This controversy has been brewing on various blog posts and social media, and recently became the subject of a lengthy email thread on the IAA SETI community mailing list.

Sexual harassment is widespread in science and in academia generally. It is completely unacceptable, and when these kinds of issues arise, our focus and priority as a community should be to protect our most vulnerable members.

Much of this criticism has been directed at the PSETI Center, and specifically at its ECRs and former members. This discussion has also been extremely upsetting, not only for these ECRs but for researchers across the SETI community and beyond: especially for those who have been victims of sexual harassment, and for minoritized researchers who need to know that the community they are in or wish to join will protect them.

For these reasons and many more, these attacks warrant a response, explanation, and defense from the PSETI Center.

Background

I don’t want to misrepresent our critics’ positions. You can read Dr. Villarroel’s version of events and their context for yourself here.

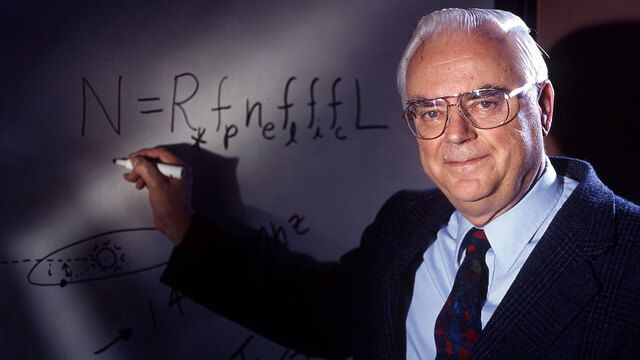

The background for this story involves Geoff Marcy, who retired from astronomy when BuzzFeed broke the story that he had violated sexual harassment policies over many years. Since then, many more stories of his behavior have come to light, and the question of whether it is appropriate to continue to work with him and include him as a co-author has come up many times. I am particularly connected with this story because Geoff was my PhD adviser and friend, and for a while I continued to have professional contact with him after the story broke. I have since ended such contact with him and apologized, after discussions with my advisees and with victims of his sexual harassment gave me an understanding of why such continued association was so harmful.

This particular story begins at an online SETI conference in which Dr. Villarroel presented research she was doing in collaboration with Marcy, during which she showed his picture on a slide. This struck some attendees as gratuitous and as potentially an effort to rehabilitate Marcy’s image in the astronomical community. It also struck some as insensitive to victims of sexual harassment and assault, especially to any of Marcy’s victims that may have been in attendance.

Around this time, a group of ECRs in SETI decided to revive the old “Order of the Dolphin” from the earliest days of SETI, rechristened as the “Order of the Octopus.” This informal group of researchers builds community in the field, and the PSETI Center is happy to have provided it some small financial and logistical support. The Order decided to meet online during the COVID pandemic in their first “Assembly.” As they wrote: “In designing the program for this conference, we are also striving to incorporate principles of inclusivity and interdisciplinarity, and to instill these values into the community from the ground up.”

I was not an organizer of the Assembly, but I think it is fair to say that they wrote their code of conduct in a way that would ensure that the image and presence of any well-known harasser like Marcy would not be welcome. This effectively meant that abstracts featuring Marcy as a co-author would be rejected, and that participants were asked not to gratuitously bring Marcy up in their talks.

What happened: The Assembly of the Order of the Octopus and the Penn State SETI Symposia

More than one researcher who applied to attend the 2021 Assembly of the Order of the Octopus, including Dr. Villarroel, had a history of working with Marcy. To ensure there were no misunderstandings, those applicants were told in advance that they were welcome to attend the conference provided that they abided by the relevant aspects of the code of conduct, and the language in question was highlighted.

When the organizers of the Assembly learned that Dr. Villarroel’s abstract was based on work published with Marcy as the second author, they withdrew their invitation to present that work, but made clear she was welcome to attend and even to submit an abstract for other work.

Dr. Villarroel chose not to attend the Assembly.

Similar code of conduct language appeared in two later PSETI events, the Penn State SETI Symposia in 2022 and 2023. Dr. Villarroel did not register to attend or submit an abstract for either symposium.

What happened: The SETI.news mailing list and SETI bibliography

Another source of criticism of the PSETI Center involved me directly. At the PSETI Center we maintain three bibliographic resources for the community: a monthly mailer¹ of SETI papers (found via an ADS query we make regularly), an annual review article, and a library at ADS of all SETI papers.

Dr. Villarroel wrote a paper with Marcy as the second author which does not mention SETI directly, but only obliquely, via the question of whether images of Earth satellites appear in pre-Sputnik photographic plates. This paper did not appear in our monthly SETI mailer, and Dr. Villarroel contacted me directly to ask that it appear in the following month’s mailer.

I declined. As I wrote:

Hi, Beatriz. Thanks for your note.

Your paper slipped through our filter because it doesn’t mention SETI at all, or even any of the search terms we key on. Did you mean to propose an ETI explanation for those sources? At any rate, if you mean for it to be a SETI paper we can add it to the SETI bibgroup at ADS so it will show up in SETI searches there (especially if there are any followup papers regarding these sources or this method).

As for SETI.news, that is a curated resource we provide as a service to the community, and we have decided that we don’t want to use it to promote Geoff Marcy’s work. This isn’t to say that we won’t include any papers he has contributed to, but this paper has him as second author and, since I know his style well, I can tell he had a heavy hand in it.

Best,

Jason

Dr. Villarroel has taken exception to my message, saying that it implies she “wasn’t the brain behind [her] own paper.” I have also learned that Dr. Villarroel feels this implication is sexist.

My meaning here was simply that Marcy’s name on an author list wasn’t an automatic bar from us considering a paper; we were specifically concerned with recent work to which he had made substantive (and not merely nominal) contributions. I think the first part of the offending sentence makes this clear. As someone who worked very closely with Marcy for many years (and as someone who is familiar with Dr. Villarroel’s other work), I felt that I could tell that he had more than a nominal role in the work behind the paper. I felt that this and his place on the author list justified that paper’s exclusion from the mailer.

But while it was certainly not my meaning, I do acknowledge the insidious and sexist pattern of presuming that papers led by women must not be primarily their own work, and that men on the author list—especially senior men—must have had an outsized role in it. Now that Dr. Villarroel has pointed this out, I do regret my choice of words, acknowledge the harm they’ve caused, and here apologize to Dr. Villarroel for the implication. For the record: I do believe that paper was led by Dr. Villarroel and is primarily hers.

Dr. Villarroel

While I obviously disagree with some of Dr. Villarroel’s interpretations of these events, I don’t think she has publicly misrepresented them. Others have, however, perpetuated misinformation in the matter.

Specifically, I want to make clear that Dr. Villarroel was never “banned” from any PSETI-related conference, and Dr. Villarroel is not being punished by our codes of conduct for her associations with Marcy. The prohibitions at the PSETI symposia are targeted at harassers, and include work they substantially contribute to. Dr. Villarroel is welcome to attend these conferences in any event, and to present any research that does not involve Marcy. She and her work have not been “cancelled,” and her work with Marcy appears in the SETI bibliography we maintain.

I also want to acknowledge the large power differential between me and Dr. Villarroel. I understand that I have some power to shape the field and her career, while she has almost none over my career. It is for this reason that I have avoided discussing her in public up to this point, or initiating any engagement with her at all. If she were more senior I would certainly have defended our actions and pushed back on her characterizations of me and the PSETI Center sooner.

At any rate, I do not bear her any ill will and I absolutely do not condone any harassment of her. That said, I understand why people are upset that she would continue to work with Marcy, and they are entitled to express that displeasure, even in potentially harsh terms, especially in private or non-professional fora, as long as they are not “punching down,” doing anything to demean, intimidate, or humiliate her, or sabotaging her work.

The Order of the Octopus SOC

I also want to acknowledge the large power differential between many of the PSETI Center’s critics and the chairs and organizing committees of our various conferences who contributed to the Codes of Conduct, many of whom are ECRs. This is another reason that I am responding here: to give voice to those who have far less power than I do and who are being attacked.

Critiques of the PSETI Center’s actions here should therefore be directed at me: I am the center director and conference chair of both symposia, and I take full responsibility for our collective actions here.

What Sorts of Codes of Conduct are Acceptable

Many have argued our bar against harassers’ work is completely inappropriate, being both unfair to Marcy and even more unfair to his innocent co-authors. I disagree, and argue that it is in fact an appropriate way to protect vulnerable members of the community who are disproportionately harmed by sexual harassment and predation.

As an aside, I note that the PSETI Center is not alone in this position; it is also consistent with our professional norms. I would point to the AAS Code of Ethics which includes a ban from authorship in AAS Journals as a potential sanction for professional misconduct. Such a sanction is analogous to a ban from authorship on conference abstracts. It is true that this ban also affects innocent co-authors, but a harasser should not be able to evade a ban by gaining co-authors. That is not guilt-by-association for the co-authors; it is a consequence of a targeted sanction. It is certainly not harassment of those co-authors.

I admit I find this whole episode somewhat confounding. A small group of ECRs got together to hold a meeting with a no-harasser rule, the rule was enforced, and now, years later, it is the subject of a huge thread on the IAA SETI community mailing list, the subject of blog posts by Lawrence Krauss, and the basis of an award by the Heterodox Academy, and it has created so much drama that I need to address it here.

I also find it ironic that many complaining about the Order of the Octopus being selective about who they decide to interact with at their own conference are ostensibly doing so to protect the principle of…freedom of academic association. To be clear: Dr. Villarroel is free to collaborate with Marcy or anyone else she chooses. This is a cornerstone of academic freedom. Are the members of the Order of the Octopus not equally free to dictate the terms of their own collaborations and the scope of their own meeting, and to select abstracts as they see fit? Freedom of association must include the freedom to not associate, or else it would be no freedom at all.

Now, I acknowledge that there are limits to this freedom: one should not discriminate on matters that have nothing to do with science, especially against minoritized people. But that’s not what’s going on here: Marcy’s behavior is worthy of sanction, and our sanctions are entirely focused on harassers like him and their research, and exist only to protect vulnerable members of the community. As I wrote, Dr. Villarroel is not guilty by association, and is welcome at future PSETI symposia, provided she abides by the Code of Conduct.

As for what behavior is appropriate towards those, like Dr. Villarroel, who choose to work with Marcy and the like, I think this is nuanced. Especially in large organizations, we should honor people’s freedom of association and in general this means those people should not lose roles or jobs for this choice alone. There should be no guilt by mere association, especially by past association—indeed, as a longtime collaborator of Geoff’s, including for years after his retirement and downfall, I am particularly sensitive to this point.

But the choice to work with Marcy will have inevitable consequences. If you are working with him, many people will rightly not want to do work with you that might involve them with him, and there are excellent reasons why one might avoid working with those who have an official record of sexual harassment violations. My students are wary of working with groups that involve Marcy, because this has led to students finding themselves on conference calls with Marcy, finding themselves on author lists with him, and getting emails from him as part of the collaboration. For me to honor my students’ freedom to not associate with Marcy, I have discovered the hard way that I need to be very careful with anyone working with him, and that I must turn my own interactions with him down to zero.

Affirmative Defense of our Codes of Conduct

At any rate, we’ve done nothing wrong. We’ve decided where we at the PSETI Center will draw the line on notorious sexual harassers like Marcy and I am confident it is the right choice for us. Other meetings and organizations will deal with this in their own way that might be different from or very similar to ours, but either way I’m confident that the majority of astronomers are comfortable with the choice we’ve made.

There is a troubling lack of empathy for the victims of sexual harassment in these abstract discussions about academic freedom. When a notorious harasser’s face, name, and work pop up in a talk, we need to remember that their victims may be in the audience, as may other victims of harassment. Allowing that to happen sends a message to everyone about what we, as a community, will tolerate, and whose interests we prioritize.

And the attacks on our code of conduct and the stance we have taken continue to do harm. The ECRs that helped write and enforce these codes are reminded that no matter how badly an astronomer acts, there will always be other astronomers there to apologize for them, to ask or even demand that their victims forgive them, to accept them back into the fold, to act like nothing happened, to insist that only a criminal conviction should trigger a response, to question, resist, and critique sanctions, and to attack astronomers that would insist otherwise.

If we, as a community, claim that we won’t tolerate sexual harassment, we need to show that we mean it by enforcing real sanctions that seek to keep our astronomers feeling safe. If we can’t do that for as clear and notorious a case as Geoff Marcy, then we can’t do it at all, and we will watch our field hemorrhage talent.

I am grateful to the many astronomers and others who passed along words of support to our ECRs as this criticism has rained down. I hope in the future more astronomers, especially senior ones, will speak up publicly to defend a strong line against sexual harassment in our community and show with their actions, voices, and platforms that all astronomers can be safe in our field.

¹ I should have been more precise in my language. James Davenport is the sole owner and operator of the seti.news website and mailer. For a while, I and other PSETI Center members supplied the data that populated it (we haven’t had the bandwidth for a while now, but hope to start up again soon). This is why the issue of Dr. Villarroel’s paper went through me. You can read Jim’s position on the topic here:
