We have all heard that 8 hours is the “recommended” amount of sleep for a good night’s rest, but that’s hard to come by in college. So I wondered: is that recommendation accurate? Is there really a difference between getting 7 hours of sleep and 8 hours? Apparently there is, because new research supports the idea that less than 8 hours of sleep is actually optimal.

Who is “in charge” of telling us how much sleep we really need? The National Sleep Foundation is obviously a big, respected group when it comes to how much sleep people need. Recently, the National Sleep Foundation released the results of a world-class two-year sleep study, which updates its guidelines on how much sleep each age group really needs. The panel consisted of 18 leading scientists and researchers, as well as 6 sleep specialists. This panel reviewed over 300 current scientific publications (meta-analyses) and voted on the appropriate amount of sleep for each age group. Basically, this study was the big kahuna of sleep studies. The National Sleep Foundation does qualify its study, saying that an exact amount of sleep cannot be pinpointed for each age group, but that there are recommended windows. They also point out that it is important to pay attention to individual factors, such as health issues, caffeine dependence, always feeling tired, and more, that could change the recommended amount of sleep for a specific person. The panel released the results in the chart above.

The panel revised the sleep ranges for all six child and teen age groups. They also added a new category (younger adults, 18-25). See this website for the exact ranges that changed.

While this may be a credible source, it is only one source. The National Heart, Lung, and Blood Institute also published its own findings on how much sleep the average person should get, and its recommendations were very consistent with the National Sleep Foundation’s. Because both sources are very credible and recommend very similar amounts of sleep, these ranges are likely a very good approximation of how much sleep each age group should get.

The recommended range for people aged 18-64 is 7-9 hours of sleep each night. Since 7 hours falls within that range, should it get more attention and be used as the target? Several sleep studies have found that seven hours, not 8, is the optimal amount of sleep.

There might be other benefits to sleeping 7 hours rather than more. Shawn Youngstedt, a professor in the College of Nursing and Health Innovation at ASU Phoenix, claims that the lowest mortality and morbidity are associated with 7 hours of sleep each night. He also claims that 8 or more hours of sleep have even been shown to be hazardous. While he is a professor of health, I couldn’t find the evidence behind his claim, just the claim itself. It could be considered somewhat credible because health is his profession, but without his data and experimental design (if he even ran an experiment), the claim can’t be considered fully credible. To check whether Youngstedt’s claim had any validity, I searched for some other studies.

Daniel F. Kripke, a professor of psychiatry at UCSD, spent 6 years tracking 1.1 million people participating in a large cancer study. These 1.1 million men and women ranged in age from 30 to 102 years old. This is important to note because the study covered a very wide age range, but it did not include any of the children’s categories or even the young adult category (from the National Sleep Foundation). This 2002 observational study controlled for 32 health factors. This is also important to note because it helps keep other health factors from skewing the results. The study reported that people who slept 6.5 to 7.4 hours had a lower mortality rate than those who slept less or more. To conclude: this study found a strong correlation between sleeping 6.5 to 7.4 hours and the lowest mortality rate. (Sidenote: this is an amazingly detailed study, and I would really recommend checking out the site attached because there is so much information and it is all super interesting.)

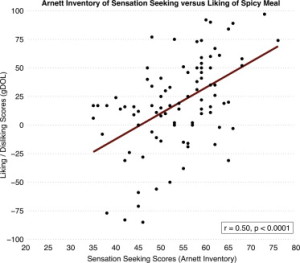

So does sleeping less than the “golden” 8 hours actually help us perform better on cognitive tests and in overall mental performance? A 2013 study tested participants through the cognitive-training website Lumosity. Researchers obtained survey data from Lumosity users between March 2011 and January 2012. Users completed three types of tests available through Lumosity: Speed Match (a 45-second matching task in which users indicate whether the current object matches the previous object shown), Memory Matrix (users are shown a pattern of squares on a grid and then asked to recall the pattern after a delay), and Raindrops (a rapid arithmetic test in which problems appear at the top of the screen and users must answer each one before it reaches the bottom). See the graphic to the left for the results of the study (I don’t know why it’s blurry, but the top row of graphs peaks at 7 hours, I promise. The bottom set of graphs is irrelevant to this blog post, but check out the study link above to learn about more of their findings). In each of the three tests, participants’ performance peaked when users reported 7 hours of sleep. This consistency across not one but all three tests indicates a strong correlation between 7 hours of sleep and peak cognitive function. Because performance peaked at 7 hours, the study also suggests that sleeping longer than that did not further improve cognitive test scores.

Now let’s relate this to all of you sleep-deprived college students. College sleep is all about inconsistency. Whether you’re out studying super late in the library, out late at a party, waking up early to tailgate, or even pulling an all-nighter to study, one night of 7 hours isn’t magic. Seven hours of sleep isn’t going to miraculously solve all of your problems, clear all exhaustion, and increase your lifespan. While college is all about inconsistency, you need 7 hours of sleep every night to reap the benefits of those 7 hours. So party hard, study hard, and sleep in late, but to get the benefits of 7 hours, you need to get them consistently.

To conclude: these two studies both strongly support the idea that sleeping 7 hours, instead of the much more commonly heard 8 hours, might prove beneficial in the long run for lower mortality and better cognitive functioning. But like I said before to all you crazy, sleep-deprived college students, the 7 hours need to be consistent to be beneficial. There are certainly going to be some nights when 7 hours of sleep is impossible, but aim for it anyway. If it’s between that Netflix marathon and getting 7 hours of sleep, choose sleep, because it will be a step in the right direction toward consistency, which can lead to lower mortality and improved cognitive function. So SC200 students, aim for a consistent 7 hours every night (and maybe when you’re scheduling classes, take your sleep schedule into account and avoid those 8AMs for an extra hour or two).

eech of their first language conflict with their pronunciation of their second. Younger students are allowed to get a “pass” when they can’t pronounce a word, yet if a high school or college student made the same language mistake, it would not be as easily overlooked. The older one gets, the more grammar and sentence structure matter, which seems to “unintentionally” penalize adults and older students for the mistakes they make while learning a language. This can ultimately be discouraging. Abbott also points out that younger learners are naturally more curious, which makes it easier for them to engage with the language. Younger children are also more accepting of people from other cultures and speakers of other languages, before they absorb stigmas about certain cultures and races.
