http://catalyst.navigator.nmc.org/gallery/

Future of Learning Environments

Josephine Hofmann & Anna Hoberg

- Changing requirements for workplace learning

- learning has to be a part of daily work (not rigid training)

- just-in-time learning

- Learning 2.0 – learning w/in working process, requires more from learners and from learning design

- Focus for Design Grid

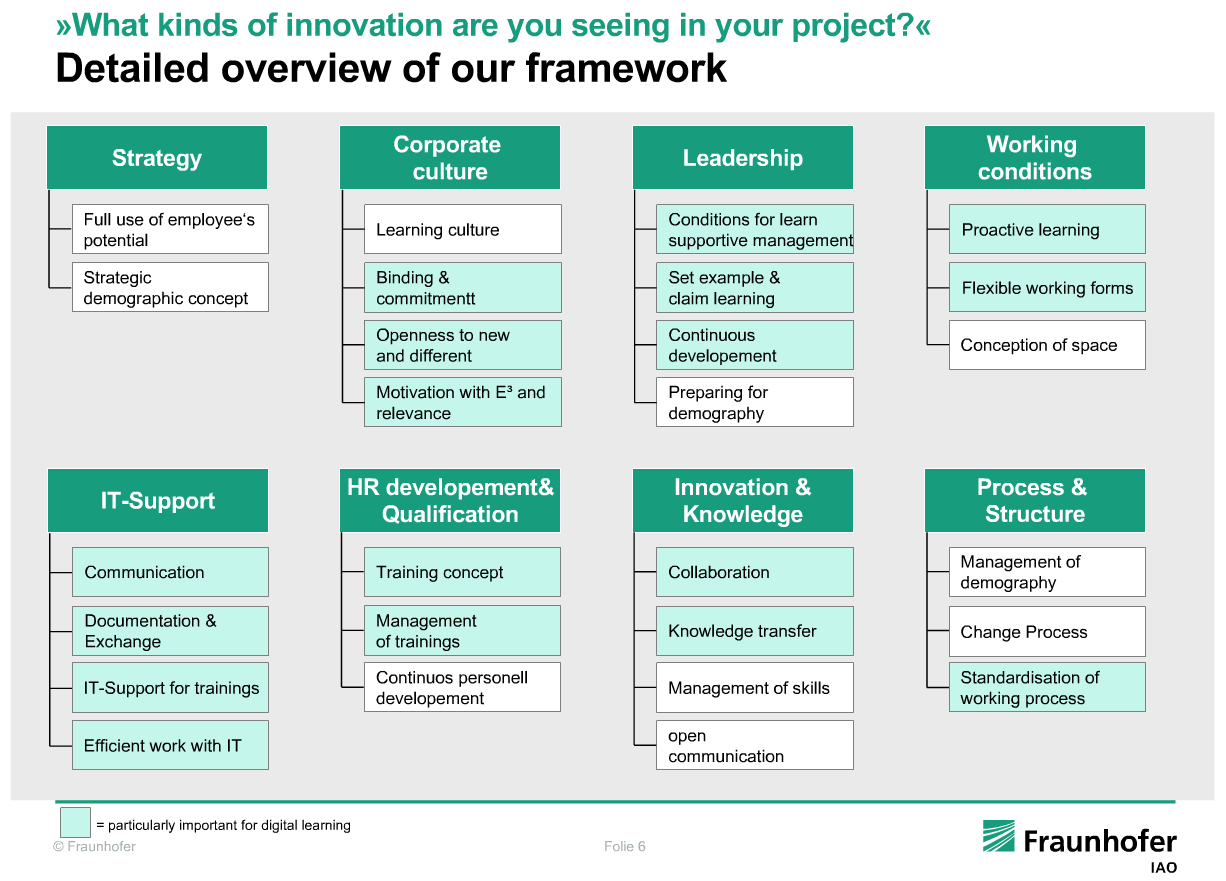

- Management Audit Framework – looks at multiple aspects of a company before designing learning; they conduct interviews, then create a complex graphic to summarize their findings

- Learning 2.0 wrap-up: “assure future innovation, offers approaches for the demographic change, prepare employees for a dynamic, permanent changing environment”

- big challenge: cultural change

Hands-on Info Tech Virtual Lab Powered by Cloud Computing

Peng Li, East Carolina University

- HP catalyst project team

- large distance education (DE) student population (about 100 students)

- abstract: secure, scalable, remote lab learning environment allows for learning anytime and anywhere

- installed HP servers, virtual labs, application image library

- physical labs are too difficult to maintain

- 1 server can replace multiple hardware computers

- decentralized approach – students install the labs on their own machines, so they need powerful computers, and instructors cannot monitor work or provide help

- centralized approach – using multiple cloud systems, on-demand, highly scalable

- virtualization is not simulation (SL = virtual world simulator); students work with real IT applications

- reservation system on Blade Server

- setting up and maintaining a cloud computing system is not easy

- assessment: most students liked the virtual labs, which helped them understand topics and develop hands-on skills; easy to monitor, easy to seek help, and resource data can be collected

- spread due dates… reduces load, since most use is in the evening

- high-speed internet and Firefox required

- more disk space and memory are required to support more students
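
To make the reservation and due-date points concrete for myself, here is a minimal Python sketch. It is my own illustration, not the ECU team's system: the `LabReservations` class, the slot labels, and the capacity of 4 are all hypothetical. It assumes each time slot can host only a fixed number of students and counts how many requests get turned away when everyone shares one due date versus when due dates are staggered.

```python
from collections import defaultdict


class LabReservations:
    """Toy reservation book (hypothetical): each slot admits at most `capacity` students."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.slots = defaultdict(set)  # slot label -> set of student ids

    def reserve(self, student, slot):
        """Return True if the student holds a seat in the slot, False if it is full."""
        booked = self.slots[slot]
        if student in booked:
            return True  # already reserved, nothing to do
        if len(booked) >= self.capacity:
            return False  # slot is full
        booked.add(student)
        return True


def count_rejected(requests, capacity):
    """Count how many (student, slot) requests are refused at the given capacity."""
    lab = LabReservations(capacity)
    return sum(0 if lab.reserve(student, slot) else 1 for student, slot in requests)


students = ["s1", "s2", "s3", "s4", "s5", "s6"]

# One shared due date: everyone wants the same evening slot.
same_due_date = [(s, "Mon 19:00") for s in students]

# Staggered due dates: half the class works on a different evening.
staggered = [
    (s, "Mon 19:00" if i % 2 == 0 else "Wed 19:00")
    for i, s in enumerate(students)
]

print(count_rejected(same_due_date, capacity=4))  # 2 students turned away
print(count_rejected(staggered, capacity=4))      # 0 students turned away
```

With the same hardware, staggering the due dates lowers the peak demand per slot, which is the effect the presenter described.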

Computation Chemistry Infrastructure

Isaac K’Owino

- audio problems – great opening video

- virtual chemistry tools VLab 1.6.4 and ChemLab 2.0

- http://www.modelscience.com/products.html?ref=home&link=chemlab

- grad, undergrad, and HS students work together

- encourages hands-on experience

- students don’t need real labs if they have these virtual labs to learn

- awesome collaboration and opportunities to make huge impacts

- http://irydium.chem.cmu.edu/find.php

Reflections

I logged on today specifically to tune in to the presentation on virtual labs powered by cloud computing. It was a very interesting presentation, and I wonder if there are aspects of this project that we could benefit from here at IST or elsewhere around PSU. We’re already using virtual labs at IST, but I’ve heard that scalability is an issue and that there are concerns we’re starting to use demonstrations over visualization.

The project that really grabbed my attention was the last presentation, from Isaac in Kenya. Although there were audio problems to begin with, I was impressed with the work Isaac has been doing with collaborators from around the world and the awesome impact they appear to be having on HS, undergrad, and graduate-level students.